Day One Center for Automotive Research Management Briefing Seminar 2018


By Thom Cannell

Senior Editor And Technology Lead

Michigan Bureau

The Auto Channel

Traverse City MI July 30, 2018: Each year for the past twelve, CAR (Center for Automotive Research) has gathered cross-disciplinary engineers, managers, scientists, and government officials for state-of-the-industry briefings. This year, as expected, the focus is on connected vehicles (whether through a dedicated connection or, for now, a simple 3G or 4G entertainment link), automated driving, Advanced Driver Assistance Systems (ADAS), and autonomous vehicles.

Seemingly every news story about the automotive industry mentions ADAS or autonomy. While much of it is forward-looking hype, some is real, particularly the drive to reduce accidents and the injuries and deaths they cause. It will be a struggle: there is only a patchwork of applicable laws, and every state and municipality wants its own needs addressed. This is, for once, a job for our legislators in Washington.

We attended several sessions on connected and automated vehicles, their technology, surrounding laws, tests and pilot programs, and more. These are some comments torn from our notes, hopefully helpful and not entirely random.

Compared to a spanking-new Boeing 787, which requires 15 million lines of computer code to direct its fly-by-wire control systems and navigation, a next-generation connected car will require 100 million lines of code and a fully autonomous vehicle will require 300 million, according to Ami Dotan, CEO and co-founder of Karamba Security. By his estimate, those 300 million lines will contain 180,000 bugs, or errors.

Unfortunately, these errors, along with other potential openings such as pathways through the infotainment system and telematics connections, leave vehicles vulnerable to hackers. Do you recall the 2015 exploit in which a Jeep was (safely) hacked and driven off the road despite the driver? Looking ahead, literally nobody knows the unknown unknowns. Dotan's solution is to close the ECUs (electronic control units, the vehicle's microcontroller processors) to unauthorized outside intrusion. Across the industry, there are many other potential solutions.

NVIDIA, the company famous for its gaming graphics cards, is a leader in Graphics Processing Units (GPUs). Danny Shapiro, director of its automotive unit, explained how NVIDIA has created massive arrays of linked GPUs that, when supplied with solid data, can “teach” the control systems used in automated and autonomous vehicles to drive safely. Rather than driving endless miles to accumulate encounters with the rarest problems, like kids darting out from between cars or errant bicyclists, engineers can simulate these situations and repeat them over, and over, and over to facilitate what is called Deep Learning for the AI (Artificial Intelligence) that will guide these advanced vehicles. (See Article Below)

Every state wants to be involved in this transportation revolution, if for no other reason than to collect permit fees or to grow test facilities and the jobs that come with them. As expected, Michigan knows a thing or two here. It has an entire proving ground near Detroit, supported by universities, suppliers, and automakers. The Michigan Department of Transportation (MDOT) has pilot projects strewn about the greater Detroit area, testing responses to vehicle congestion, curve warnings (stalled or slowed traffic ahead), pavement conditions, and weather.

Weather warnings, if your car could receive them and act automatically, might prevent those massive pile-ups so often reported. Do you recall any that weren’t associated with bad weather? The state has also enacted legislation permitting truck platooning, which improves fuel economy by 4 percent for the lead truck and 8 percent for the follower. That is huge.

Perhaps the most interesting information from MDOT was that it can easily and cheaply integrate the latest telematics infrastructure into new intersections. A new intersection costs about $200,000, so adding a radio transceiver is a small incremental cost. Incidentally, Michigan is setting up fiber-optic connectivity for its systems to keep future hackers from penetrating deep into state government.

In cooperation with 3M, MDOT has embedded hidden bar codes in road signs. In the future, radar, lidar, or cameras could read these codes and point to a changeable database that delivers, for instance, road warnings or weather alerts. Experiments also show that cameras mounted on traffic lights can deliver 1- to 2-second alerts to smart vehicles, far better than a vehicle trying to assess traffic on its own.

Audi, with its viewpoint delivered by Anupam Malhotra, Audi of America’s director of connected vehicles, surprised us by saying highly automated vehicles are not an industry disruptor, as many have claimed. “It is a feature we can sell to our customers,” he said. Audi’s focus is on reducing emissions, human-caused accidents, and congestion. Malhotra says 40 percent of space in major cities is devoted to parking. Mobility, anyone?

And what about those who have no license, are too young, or are disabled? This, Audi thinks, is an opportunity for mobility solutions rather than personally owned vehicles (though recent “rent my car” schemes might prevail). “While the shared model is growing fast, there is no viable business plan, yet,” he said.

You’d think that an organization like SEMA, the Specialty Equipment Market Association, whose members provide chrome wheels, larger turbochargers, parts for your vintage auto, and more, would be uninterested in mobility. Nothing could be further from the truth. In fact, the AutonomouStuff website shows that SEMA has generated 25 spinoff companies based on new technology. And SEMA is vitally interested in robo racing, a competition where the better algorithm, not the bigger engine, will prevail.

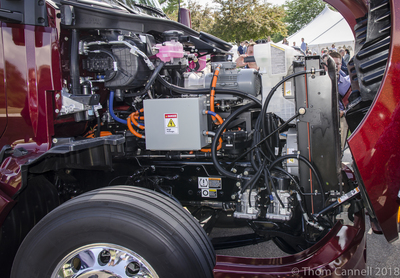

As we were departing, Toyota unveiled its latest fuel cell vehicle (FCV): not a Camry or Mirai, but a Kenworth-based semi! The new, second-generation semi is destined to continue proof-of-concept work at the ports of Long Beach and Los Angeles. This ZEV, or zero-emissions vehicle, is a Class 8 truck with a 200-mile range between hydrogen fills, and its 670-horsepower electric drive produces 1,325 pound-feet of torque. The V2.0 FCV uses two fuel cell stacks from the Mirai sedan and a 12 kWh battery. Gross combined weight capacity, important for its duty hauling cargo, is 80,000 pounds. The vehicle, complete with its own badges, is a product of both Toyota engineering and the engineering company Ricardo.

A Sixth Sense for Self-Driving Cars: Startup Builds Intuition into Driverless Vehicles

You can learn a lot about a person in just one glance. You can tell if they’re tired, distracted or in a rush. You know if they’re headed home from work or hitting the gym.

And you don’t have to be Sherlock Holmes or Columbo to make these types of instant deductions — your brain does it constantly. It’s so good at this type of perception, in fact, you hardly realize it’s happening.

Using deep learning, Perceptive Automata, a startup spun out from Harvard University, is working to infuse this same kind of human intuition into autonomous vehicles.

Visual cues like body language or what a person is holding can provide important information when making driving decisions. If someone is rushing toward the street while talking on the phone, you can conclude their mind is likely focused elsewhere, not on their surroundings, and proceed with caution. Likewise, if a pedestrian standing at a crosswalk looks both ways, you know they’re aware of and anticipating oncoming traffic.

“Driving is more than solving a physics problem,” said Sam Anthony, co-founder and chief technology officer at Perceptive Automata. “In addition to identifying objects and people around you, you’re constantly making judgments about what’s in the mind of those people.”

For self-driving car development, Perceptive Automata’s software adds a layer of deep learning algorithms trained on real-world human behavior data. By running these algorithms simultaneously with the AI powering the vehicle, the car can gain a more sophisticated view of its surroundings, further enhancing safety.

A Perceptive Perspective

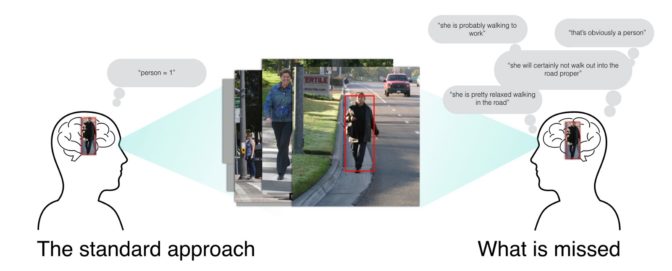

To bolster a vehicle’s understanding of the outside world, Perceptive Automata takes a unique approach to training deep learning algorithms. Traditional training uses a variety of images of the same object to teach a neural network to recognize that object. For example, engineers will show deep learning algorithms millions of photos of emergency vehicles, then the software will be able to detect emergency vehicles on its own.

Rather than using images for just one concept, Perceptive Automata relies on data that can communicate to the networks a range of information in one frame. By combining facial expressions with other markers, like whether a person is holding a coffee cup or a cellphone, the software can draw conclusions about where the pedestrian is focusing their attention.

Perceptive Automata depends on NVIDIA DRIVE for powerful yet energy-efficient performance. The in-vehicle deep learning platform allows the software to analyze a wide range of body language markers and predict the pathway of pedestrians. The software can make these calculations for one person in the car’s field of view or an entire crowd, creating a safer environment for everyone on the road.

Humanizing the Car of the Future

Adding this layer of nuance to autonomous vehicle perception ultimately creates a smoother ride, Anthony said. The more information available to a self-driving car, the better it can adapt to the ebb and flow of traffic, seamlessly integrating into an ecosystem where humans and AI share the road.

“As the industry’s been maturing and as there’s been more testing in urban environments, it’s become much more apparent that nuanced perception that comes naturally to humans may not for autonomous vehicles,” Anthony said.

Perceptive Automata’s software adds diversity to the driving stack by incorporating sophisticated deep neural networks, offering a safe and robust solution to this perception challenge. With this higher level of understanding, self-driving cars can drive smarter and safer.